#Logstash docker ip address download

The rest of the command will download Elasticsearch from the Elasticsearch website, unpack it, configure the permissions for the Elasticsearch folder, and then start Elasticsearch.

Because of the nature of Docker containers, once a container is removed, the data inside it is no longer available, and running the image again creates a brand-new container.

To ensure that your image has been created successfully, type docker images into your terminal window; java_image will appear in the list that the command produces. It will look something like this:

    java_image latest 61b407e1a1f5 About a minute ago 503.4 MB

Assembling an Elasticsearch Image

After building the base image, you can move on to Elasticsearch and use java_image as the base for your Elasticsearch image.

#Logstash docker ip address install

Each command that you write creates a new image that is layered on top of the previous (or base) image(s). You can then create new containers from your base images.

Let’s say that you need to create a base image (we’ll call it java_image) to pre-install a few required libraries for your ELK Stack. First, let’s make sure that you have all of the necessary tools, environments, and packages in place. For the sake of this article, you will use Ubuntu 16.10 with OpenJDK 7 and a user called esuser to avoid starting Elasticsearch as the root user. Note that the Dockerfile shown here installs Oracle Java 8 from the webupd8team PPA.

The Dockerfile for java_image:

    FROM ubuntu:16.10
    # Refresh the package lists first so the installs below can resolve packages
    RUN apt-get update && \
        apt-get install -y python-software-properties software-properties-common
    # Pre-accept the Oracle license, add the PPA, then install Java 8
    RUN echo oracle-java8-installer shared/accepted-oracle-license-v1-1 select true | debconf-set-selections && \
        add-apt-repository -y ppa:webupd8team/java && \
        apt-get update && \
        apt-get install -y oracle-java8-installer

By running docker build -t java_image . in the directory that contains the Dockerfile, Docker will create an image with the custom tag java_image (the -t option sets the tag for the image).

#Logstash docker ip address how to

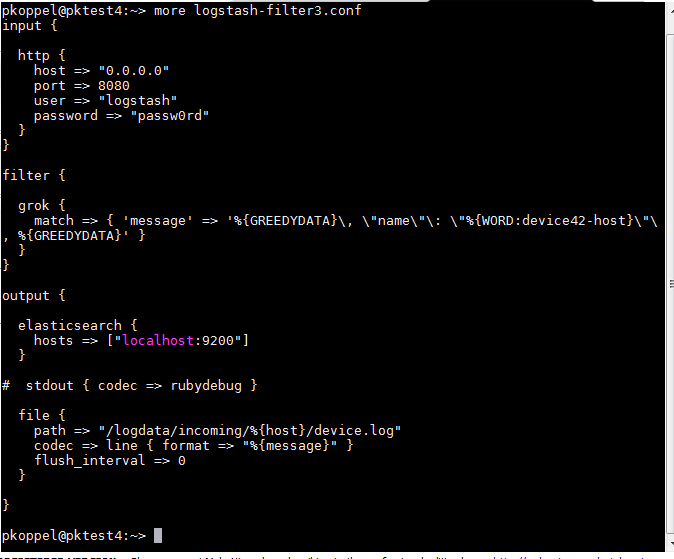

Filebeat also needs to be used because it helps to distribute loads from single servers by separating where logs are generated from where they are processed. Consequently, Filebeat helps to reduce CPU overhead by using prospectors to locate log files in specified paths, leveraging harvesters to read each log file, and sending new content to a spooler that combines and sends out the data to an output that you have configured.

This guide from Logz.io, a predictive, cloud-based log management platform that is built on top of the open-source ELK Stack, will explain how to build Docker containers and then explore how to use Filebeat to send logs to Logstash before storing them in Elasticsearch and analyzing them with Kibana.

This section will outline how to create a Dockerfile, assemble images for each ELK Stack application, configure the Dockerfile to ship logs to the ELK Stack, and then start the applications. If you are unsure about how to create a Dockerfile script, you can learn more here. Docker containers are built from images that can range from basic operating system data to information from more elaborate applications.

Elasticsearch is a NoSQL database that is based on the Lucene search engine. Logstash is a log pipeline tool that accepts inputs from various sources, executes different transformations, and exports the data to various targets. Kibana is a visualization layer that works on top of Elasticsearch. Filebeat is an application that quickly ships data directly to either Logstash or Elasticsearch.
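Filebeat's flow of prospectors, harvesters, and a spooler can be pictured with a small toy model. The sketch below is not Filebeat's implementation; the names `prospect`, `Harvester`, `Spooler`, and `run_once` are hypothetical and exist only for illustration. It assumes UTF-8 log files and a batch size chosen arbitrarily.

```python
import glob


def prospect(patterns):
    """Prospector role: locate log files that match the configured paths."""
    paths = set()
    for pattern in patterns:
        paths.update(glob.glob(pattern))
    return sorted(paths)


class Harvester:
    """Harvester role: read one file and remember how far it has been read."""

    def __init__(self, path):
        self.path = path
        self.offset = 0

    def read_new_lines(self):
        with open(self.path, "rb") as handle:
            handle.seek(self.offset)
            data = handle.read()
        # Consume only complete lines; a partial trailing line waits for the next pass.
        end = data.rfind(b"\n") + 1
        self.offset += end
        return data[:end].decode("utf-8").splitlines()


class Spooler:
    """Spooler role: combine events into batches and hand them to an output."""

    def __init__(self, output, batch_size=2):
        self.output = output
        self.batch_size = batch_size
        self.buffer = []

    def add(self, event):
        self.buffer.append(event)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self):
        if self.buffer:
            self.output(list(self.buffer))
            self.buffer.clear()


def run_once(patterns, harvesters, spooler):
    """One polling pass: find files, read new content, ship it in batches."""
    for path in prospect(patterns):
        harvester = harvesters.setdefault(path, Harvester(path))
        for line in harvester.read_new_lines():
            spooler.add({"source": path, "message": line})
    spooler.flush()
```

Calling `run_once` repeatedly with the same `harvesters` dictionary ships only the lines appended since the previous pass, which is the property that lets the shipper stay lightweight on the host where logs are generated.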

The ELK Stack is a collection of three open-source products: Elasticsearch, Logstash, and Kibana.

#Logstash docker ip address software

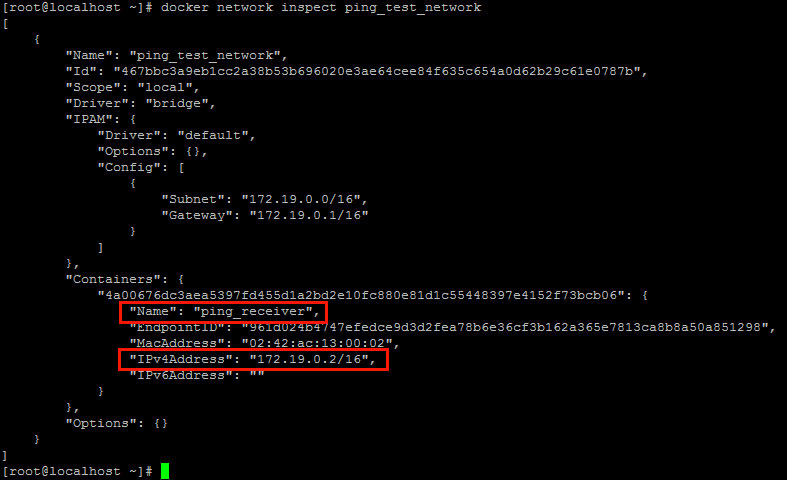

The popular open source project Docker has completely changed service delivery by allowing DevOps engineers and developers to use software containers to house and deploy applications within single Linux instances automatically. This guide from Logz.io for Docker monitoring explains how to build Docker containers and then explores how to use Filebeat to send logs to Logstash before storing them in Elasticsearch and analyzing them with Kibana.

NOTE/WARNING: As noted in the comment, you need to delete your pre-existing Docker networks after applying this patch. If you forgot to do this in Docker Compose v1, it printed an error saying that the network settings in the project and the daemon were different and conflicted. In Docker Compose v2.0.0, it appears to silently continue using the old network configuration, binding to 0.0.0.

    ".name": "docker0 ",

And that explains why creating a new network with the option ".host_binding_ipv4"="192.168.17.1" can solve this problem as well.

    diff --git a/compose/network.py b/compose/network.py
    --- a/compose/network.py
    +++ b/compose/network.py
    @@ -256,6 +256,12 @@ def build_networks(name, config_data, client):
    +    for name, conf in ems():
    +        if conf.driver and conf.driver != "bridge":
    +            continue
    +        conf.driver_opts = conf.driver_opts if conf.driver_opts is not None else
    +    Networks = Network(client, name, 'default')
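The idea behind the patch, giving every bridge network a default host binding address, can be sketched independently of Compose's internals. The function below is a hypothetical illustration that works on plain dictionaries rather than Compose's own network objects, and it assumes that the truncated option name in the text refers to Docker's bridge driver option `com.docker.network.bridge.host_binding_ipv4`. The address `192.168.17.1` is the one quoted above.

```python
# Assumption: the truncated ".host_binding_ipv4" refers to this bridge driver option.
HOST_BINDING_OPT = "com.docker.network.bridge.host_binding_ipv4"


def with_host_binding(networks, ip):
    """Return a copy of `networks` in which bridge networks default to binding on `ip`.

    `networks` maps a network name to a dict that may contain "driver" and
    "driver_opts" keys (a hypothetical shape chosen for this sketch).
    """
    patched = {}
    for name, conf in networks.items():
        conf = dict(conf)
        driver = conf.get("driver")
        # Leave non-bridge networks alone, as the patch does.
        if driver and driver != "bridge":
            patched[name] = conf
            continue
        # Treat missing or None driver_opts as empty, then add the default binding
        # without overriding a value the user already set.
        opts = dict(conf.get("driver_opts") or {})
        opts.setdefault(HOST_BINDING_OPT, ip)
        conf["driver_opts"] = opts
        patched[name] = conf
    return patched


if __name__ == "__main__":
    example = {"default": {}, "backend": {"driver": "overlay"}}
    print(with_host_binding(example, "192.168.17.1"))
```

Returning a copy instead of mutating the input keeps the original configuration available for comparison, which is useful given that stale networks have to be deleted before new settings take effect.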
